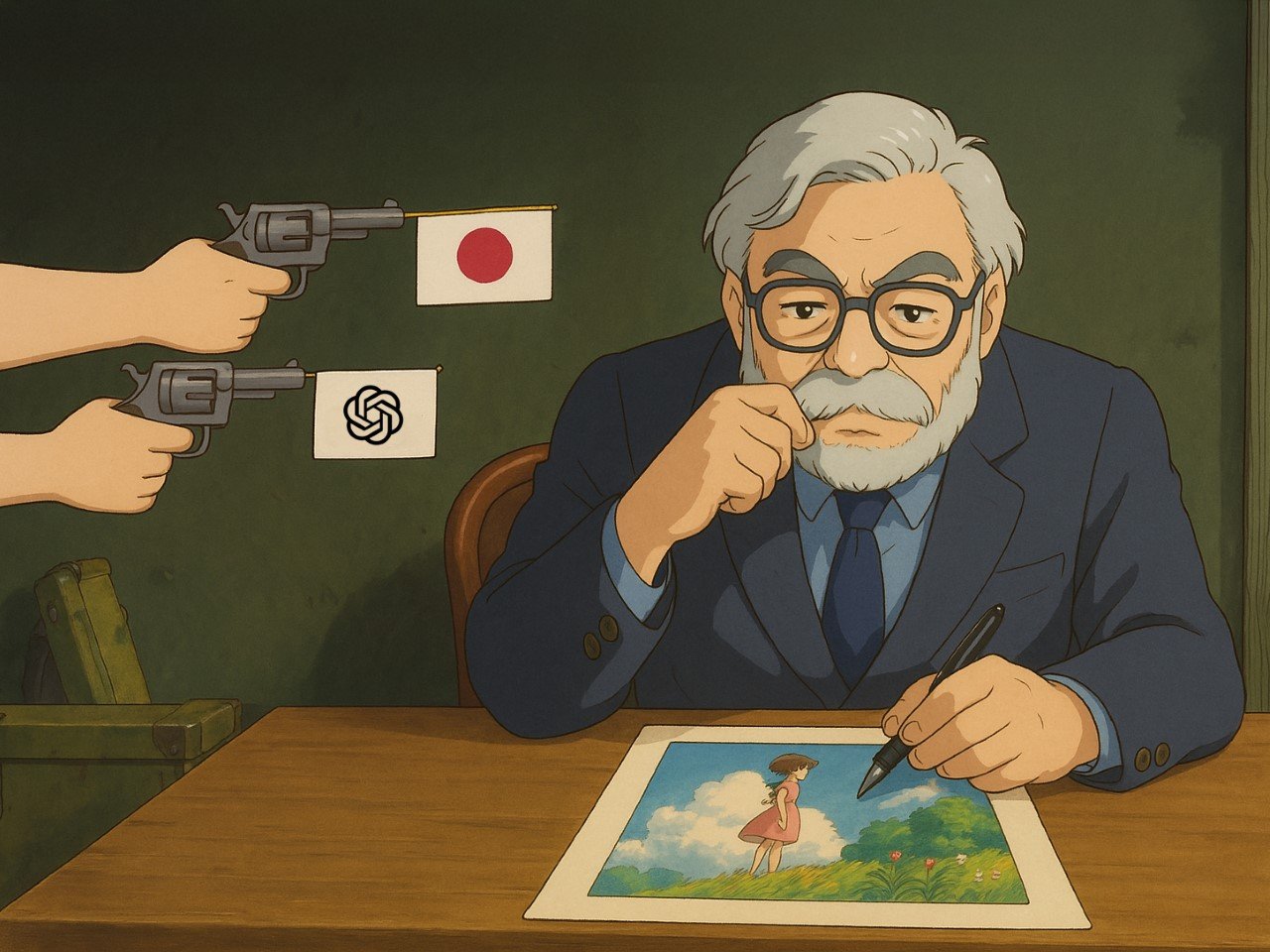

Generated using ChatGPT v4o

This is a story of betrayal, and how Japan ‘screwed over’ its most beloved artist in the name of AI supremacy/advocacy.

If you’ve been on the internet in the past week, you’ve almost certainly seen a flood of AI-generated photos in a certain anime style. Colloquially referred to as the ‘Studio Ghibli’ aesthetic, it mimics the artistic style of celebrated filmmaker Hayao Miyazaki’s body of work. The trend began after Sam Altman debuted GPT-4o’s ability to translate any photo into any style. For some reason, though, it was the Ghibli style that went truly viral, with everyone (including even national leaders) using it in ways that blurred the line between cute and potentially disturbing.

There’s a lot of opinion to be shared here, especially about Miyazaki himself, who, judging by his past remarks on AI, would hate the fact that his years of hard work have been distilled to something as abhorrently dull as a ‘Snapchat filter’. Miyazaki’s work has always been the antithesis of AI: it’s rooted in empathy, humanity (the good kind), and a kindred spirit that prioritizes the living, the curious, and the underdogs. AI’s use of this style seems to be the absolute opposite of everything Miyazaki stands for. He’s always been a man who prioritized the artform; his producer famously sent Harvey Weinstein a samurai sword with a stern warning when Weinstein asked that one of Miyazaki’s feature films be cut to a 90-minute format for easy consumption. But opinion aside, let’s talk about what’s transpired over the past week, and how Japan’s own government screwed over Miyazaki by handing Ghibli’s entire catalog to OpenAI on a silver platter.

The Digital Pickpocketing of Artistic Soul

Grant Slatton’s Ghibli-fied image is what arguably sparked the global trend

OpenAI’s latest party trick allows users to “Ghiblify” their selfies into dreamy anime-style portraits that unmistakably channel the aesthetic of Studio Ghibli. The feature has gone predictably viral, with social media awash in images that mimic the studio’s distinctive style—soft watercolor backgrounds, expressive eyes, and that ineffable sense of wonder that made films like “Spirited Away” and “My Neighbor Totoro” international treasures.

What makes this digital ventriloquism act particularly galling isn’t just that it’s happening, but that it’s happening to Miyazaki of all people. This is a man who famously handcrafts his animations and who, when shown an AI animation demonstration, responded with open disgust, calling it “an insult to life itself.” His revulsion wasn’t mere technophobia but a principled stand against the mechanization of an art form he believes should capture the messy, beautiful complexity of human experience.

The irony would be delicious if it weren’t so bitter: the artist who rejected computers is now being replicated by them, his distinctive visual language reduced to a prompt parameter.

Japan’s Legal Betrayal

Miyazaki himself refers to AI art as an insult to life itself.

The true villain in this artistic appropriation isn’t necessarily OpenAI (though they’re hardly innocent bystanders). It’s Japan’s bewilderingly creator-hostile copyright framework. In May 2023, the Japanese Agency for Cultural Affairs issued an interpretation of copyright law that effectively threw creative professionals under the technological bus, declaring that copyrighted works could be used without permission for AI training purposes. (The relevant provision, Article 30-4 of Japan’s Copyright Law, allows AI to train on copyrighted material if the purpose is ‘non-enjoyment’, which roughly translates to: artistic styles can be replicated as long as the AI doesn’t reproduce the ideas, sentiments, scenes, or characters from the training data.)

The legal loophole hinges on a distinction that would make even the most pedantic lawyer blush: as long as the AI isn’t “enjoying” the works it’s ingesting (whatever that means for a neural network), it’s perfectly fine to feed it the entire corpus of an artist’s life work without consent or compensation. Article 30-4 of Japan’s Copyright Law provides this exception for “non-enjoyment purposes,” essentially declaring open season on creative content so you can ‘Ghiblify’ your selfie without infringing on Miyazaki’s copyrighted material. As long as the AI doesn’t make photos of you standing beside Totoro, or recreate scenes from Spirited Away, it’s all kosher.

This isn’t just bad policy—it’s spectacular cognitive dissonance from a nation that has built significant cultural capital and soft power through its artistic exports. Japan, home to anime, manga, and some of the world’s most distinctive visual storytellers, has essentially told its creative class: “Your work is valuable enough to protect from human copycats, but feel free to let the machines have at it.”

The Existential Threat to Artistic Innovation

“But wait,” the techno-optimists cry, “isn’t imitation the sincerest form of flattery? Aren’t artists always influenced by those who came before?”

This argument fundamentally misunderstands both the nature of artistic influence and the economics of creative work. When a human artist studies Miyazaki’s techniques, they’re engaging in a centuries-old tradition of artistic apprenticeship. They digest, internalize, and transform influences through the prism of their own humanity, eventually developing something new. What emerges is evolution, not replication.

AI systems, by contrast, are designed specifically to replicate existing styles on demand. They don’t “learn” in the human sense—they statistically model patterns and reproduce them with variations. There’s no artistic journey, no struggle, no evolution of personal vision. The result is a flattening of artistic diversity, where new styles can be instantly mimicked and mass-produced the moment they emerge.

For emerging artists, this creates a perverse disincentive to innovation. Why spend years developing a distinctive style when an AI can copy it overnight? Why push creative boundaries when algorithms can immediately appropriate your breakthroughs? The result is a potential creative chill, where artistic innovation becomes economically irrational… because you don’t want to become a victim of your own success.

The Miyazaki Paradox

The situation creates what future generations will probably refer to as the Miyazaki Paradox: the more distinctive and influential your artistic voice becomes, the more vulnerable you are to algorithmic appropriation. Miyazaki’s style is being copied precisely because it’s so recognizable and beloved. His success has made him a target.

This paradox extends beyond animation. Authors with distinctive prose styles, musicians with unique sounds, and visual artists with particular techniques all face the same threat. Their creative fingerprints—developed through decades of practice and refinement—become training data for systems that can reproduce them without attribution or compensation.

What’s particularly galling is that this appropriation is happening to Miyazaki while he’s still actively working. Now 84, he released what may be his final film, “The Boy and the Heron,” in 2023. Rather than celebrating this capstone to an extraordinary career, we’re watching his artistic DNA being spliced into commercial AI systems without his consent.

Legal Whack-a-Mole in a Borderless Digital World

McDonald’s came under fire for using GPT’s filter to plagiarize Miyazaki’s style in its marketing material

The global nature of AI development creates a jurisdictional nightmare for creators seeking to protect their work. While Japan has explicitly permitted the use of copyrighted works for AI training, content creators in other countries may have valid claims under their own copyright laws. This creates a complex legal patchwork that benefits primarily those with the deepest pockets—typically the tech companies, not individual artists.

Even when creators attempt to protect their work through legal means, they face an uphill battle. Copyright infringement claims require proving substantial similarity and actual copying—difficult standards to meet when dealing with AI systems that blend thousands of sources. The burden of proof often falls on creators who lack the resources to pursue complex litigation against tech giants.

The use of this filter to ‘animate’ powerful images feels like the grossest disrespect of history and its impact on life. Here, someone turned the JFK assassination into seemingly light-hearted artwork.

Digital Self-Defense: Protecting Your Creative Work

Despite these challenges, creators aren’t entirely powerless. Several strategies have emerged for protecting creative works in the age of AI:

Technological Countermeasures: Tools like the University of Chicago’s “Glaze” introduce subtle perturbations to images that are invisible to humans but confuse AI systems attempting to learn an artist’s style. Think of it as digital camouflage for your creative DNA.

Strategic Licensing: Licenses or terms of use with explicit restrictions on AI training (something the standard Creative Commons licenses do not spell out on their own) can establish clear boundaries for how your work can be used. While enforcement remains challenging, explicit prohibitions create legal leverage.

Embrace the Inimitable: Focus on aspects of creativity that AI struggles to replicate—conceptual depth, cultural context, personal narrative, and authentic emotional resonance. The most human elements of art remain the most difficult to algorithmically reproduce.

Collective Action: Individual creators have limited power, but collective movements can influence both policy and corporate behavior. Organizations like the Authors Guild and various visual artists’ associations are already pushing back against unauthorized use of creative works for AI training.

Blockchain Verification: While not a panacea, blockchain technology can create verifiable provenance for original works, helping audiences distinguish between human-created content and AI imitations.
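For the provenance idea in particular, the core building block is simpler than the word “blockchain” suggests: a cryptographic fingerprint of the original file, recorded somewhere public with a timestamp. Below is a minimal sketch in Python, assuming a plain SHA-256 hash; the record layout and the function names are hypothetical illustrations, not part of any real provenance standard or ledger API.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a work's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def make_provenance_record(data: bytes, creator: str, title: str) -> dict:
    """Build a small record that could be published or anchored to a ledger.

    The field names here are hypothetical, chosen only for illustration.
    """
    return {
        "title": title,
        "creator": creator,
        "sha256": fingerprint(data),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def matches_record(data: bytes, record: dict) -> bool:
    """Check whether a file is byte-for-byte the work the record describes."""
    return fingerprint(data) == record["sha256"]


if __name__ == "__main__":
    # Stand-in bytes; in practice you would read the original image file.
    original = b"stand-in bytes for an original illustration"
    record = make_provenance_record(
        original, creator="Example Artist", title="Sketch 01"
    )
    print(json.dumps(record, indent=2))
    print(matches_record(original, record))         # same bytes
    print(matches_record(original + b"!", record))  # altered bytes
```

A caveat worth keeping in mind: a record like this only shows that a specific file existed in that exact form when it was recorded. It says nothing about who actually made it, and a resized or re-encoded copy of the same artwork will not match the hash.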

To add insult to injury, the official White House Twitter Account shared this dehumanizing Ghibli-fied image of an immigrant being arrested for deportation

The Bitter Irony: AI’s Dependence on Human Creativity

Perhaps the most frustrating aspect of this situation is that AI systems fundamentally depend on human creativity to function. Without Miyazaki’s decades of artistic innovation, there would be no “Ghibli style” for ChatGPT to mimic. These systems are parasitic on the very creative ecosystem they threaten to undermine.

This creates an unsustainable dynamic: if AI systems discourage artistic innovation by making it instantly replicable, they will eventually exhaust the supply of novel human creativity they require as training data. It’s the technological equivalent of killing the golden goose—extracting short-term value at the expense of long-term cultural vitality.

The post How Japan’s Copyright Laws Allowed ChatGPT to Blatantly ‘Steal’ Studio Ghibli’s Work first appeared on Yanko Design.