ohio state university researchers consider capacity of ai models

Imagine learning the concept of a flower without ever smelling a rose or brushing your fingers across its petals. We might be able to form a mental image of it or describe its characteristics, but would we truly understand the concept? This is the central question of a recent study by The Ohio State University, which investigates whether large language models like ChatGPT and Gemini can represent human concepts without experiencing the world through a body. According to the Ohio State researchers and their collaborators at other institutions, the answer is: not entirely.

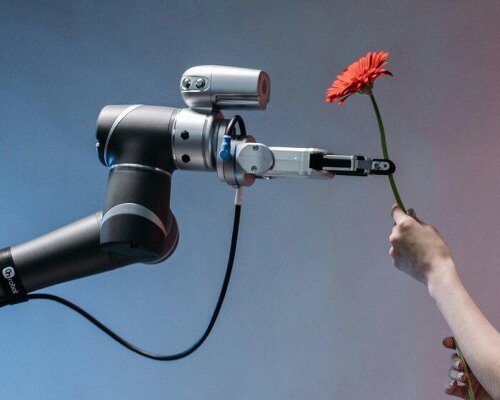

The findings suggest that even the most advanced AI tools still lack the sensorimotor grounding that gives human concepts their richness. Large language models are remarkably good at identifying patterns, categories, and relationships in language, often outperforming humans in strictly verbal or statistical tasks. The study, however, reveals a consistent shortfall when it comes to concepts rooted in sensorimotor experience. When a concept involves senses such as smell or touch, or bodily actions such as holding, moving, or interacting, language alone does not seem to be enough.

all images courtesy of Pavel Danilyuk

chatgpt & gemini might not fully grasp the concept of a flower

The researchers at The Ohio State University tested four major AI models — GPT-3.5, GPT-4, PaLM, and Gemini — on a dataset of over 4,400 words that humans had previously rated along different conceptual dimensions. These dimensions ranged from abstract qualities like ‘imageability’ and ‘emotional arousal,’ to more grounded ones like how much a concept is experienced through the senses or through movement.

The AI models were then asked to score words such as ‘flower’, ‘hoof’, ‘swing’, and ‘humorous’ along the same dimensions, and their ratings were compared with the human ones. The models aligned closely with humans on non-sensory dimensions such as imageability or valence, but their alignment dropped significantly when sensory or motor qualities were involved. A flower might be recognized as something visual, for instance, but the models struggled to represent the integrated physical experiences that most people naturally associate with it. ‘A large language model can’t smell a rose, touch the petals of a daisy, or walk through a field of wildflowers,’ says Qihui Xu, lead author of the study. ‘They obtain what they know by consuming vast amounts of text — orders of magnitude larger than what a human is exposed to in their entire lifetimes — and still can’t quite capture some concepts the way humans do.’

investigating whether large language models like ChatGPT and Gemini can accurately represent human concepts

the role of the senses and bodily experience in thought

The study, recently published in Nature Human Behaviour, taps into a long-running debate in cognitive science over whether we can form concepts without grounding them in bodily experience. Some theories suggest that humans, particularly those with sensory impairments, can build rich conceptual frameworks from language alone. Others argue that physical interaction with the world is inseparable from how we understand it. In this view, a flower is more than an object with a particular form. It is a set of sensory triggers and embodied memories, such as the feeling of sunlight on your skin or the moment of stopping to sniff a bloom, along with emotional associations with gardens, gifts, grief, or celebration. These are multimodal, multisensory experiences, and current language models like ChatGPT and Gemini, trained mostly on internet text, can only approximate them.

The models are not without ability, however. In one part of the study, they correctly identified roses and pasta as both being ‘high in smell.’ Yet humans are unlikely to think of the two as conceptually similar, because we don’t compare objects by a single attribute; we draw on a multidimensional web of experiences that includes how things feel, what we do with them, and what they mean to us.

the study by The Ohio State University suggests that these AI models cannot understand sensorial human experiences

the future of large language models and embodied ai

Interestingly, the study also found that models trained on both text and images performed better in certain sensory categories, particularly in dimensions related to vision. This hints at a future in which multimodal training (combining text, visuals, and eventually sensor data) might bring AI models closer to human-like understanding. Still, the researchers are cautious. As Qihui Xu notes, even with image data, AI lacks the ‘doing’ part: the way concepts are formed through action and interaction.

Integrating robotics, sensor technology, and embodied interaction could eventually move AI toward this kind of situated understanding. But for now, the human experience remains far richer than what language models — no matter how large or advanced — can replicate.

in one part of the study AI models accurately linked roses and pasta as both being ‘high in smell’

The post why AI language models like chatGPT and gemini can’t understand flowers like humans do appeared first on designboom | architecture & design magazine.